Fixing warnings from the crictl and ctr commands

```
WARN[0000] runtime connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.
ERRO[0000] validate service connection: validate CRI v1 runtime API for endpoint "unix:///var/run/dockershim.sock": rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial unix /var/run/dockershim.sock: connect: no such file or directory"
WARN[0000] image connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.
ERRO[0000] validate service connection: validate CRI v1 image API for endpoint "unix:///var/run/dockershim.sock": rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial unix /var/run/dockershim.sock: connect: no such file or directory"
```

Set crictl's endpoint to the one your environment actually uses, and the warnings go away:

```
crictl config runtime-endpoint unix:///run/containerd/containerd.sock
```

Check the resulting configuration:

```
root@server4:~# crictl config --list
KEY                    VALUE
runtime-endpoint       unix:///run/containerd/containerd.sock
image-endpoint
timeout                0
debug                  false
pull-image-on-create   false
disable-pull-on-run    false
```

Fixing nodes that cannot pull the pause image

Since the runtime is containerd, the fix goes in containerd's configuration file: change the pause image it uses.

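If /etc/containerd/config.toml does not exist yet, first generate the default config with `containerd config default > /etc/containerd/config.toml`. Then, in that file, change the pause image (the `sandbox_image` setting) to the Aliyun mirror `registry.aliyuncs.com/google_containers/pause:3.9` and restart containerd with `systemctl restart containerd`. Once this works, `crictl ps` lists the running containers.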
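The edit above can also be scripted. The sketch below uses a hypothetical helper, `set_sandbox_image` (not part of containerd), and assumes the containerd 1.x config layout, where the pause image is the `sandbox_image` key of the CRI plugin section; other containerd versions may name it differently, so check the generated file first. It relies on GNU `sed -i` and needs root when it edits /etc/containerd/config.toml.

```shell
#!/bin/sh
# Hypothetical helper (not part of containerd): point the CRI sandbox ("pause")
# image at a mirror. Assumes the containerd 1.x layout with a sandbox_image key.
set_sandbox_image() {
  config="${1:-/etc/containerd/config.toml}"
  image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"

  # Generate the default config first if the file does not exist yet
  if [ ! -f "$config" ]; then
    mkdir -p "$(dirname "$config")"
    containerd config default > "$config"
  fi

  # Replace whatever value sandbox_image has, keeping the key and indentation
  # (GNU sed in-place edit; "|" is the delimiter because the image contains "/")
  sed -i "s|^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*|\1\"$image\"|" "$config"

  # Show the result; grep exits non-zero if no sandbox_image line was found
  grep -n 'sandbox_image' "$config"
}
```

Run it as root with no arguments to edit /etc/containerd/config.toml in place, then apply the change with `systemctl restart containerd`.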

```
root@server4:~# crictl ps
CONTAINER       IMAGE           CREATED          STATE     NAME           ATTEMPT   POD ID          POD
4dbc47500c4e7   f9c73fde068fd   24 seconds ago   Running   kube-flannel   2         f510ce2402955   kube-flannel-ds-dvxjt
b50860e65832f   9344fce2372f8   51 seconds ago   Running   kube-proxy     3         1a3c640873687   kube-proxy-kx8xf
```

Fixing ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables

```
root@server6:~# modprobe br_netfilter
root@server6:~# echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables
root@server6:~# echo "1" > /proc/sys/net/bridge/bridge-nf-call-ip6tables
```

Fixing [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

```
echo 1 > /proc/sys/net/ipv4/ip_forward
```

Upgrading k8s with APT

```
sudo apt install --only-upgrade kubeadm kubectl kubelet
```

Upgrading k8s with kubeadm

You can upgrade kubeadm first and then use kubeadm to upgrade the other components.
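That order can be sketched as a shell function, loosely following the steps in the Kubernetes "Upgrading kubeadm clusters" documentation. It assumes Debian/Ubuntu packages from a pkgs.k8s.io repository already configured for the target minor version; `run`, `upgrade_control_plane` and `DRY_RUN` are hypothetical names introduced here. Note that `kubeadm upgrade apply` does not touch kubelet, so kubelet and kubectl are still upgraded through APT afterwards.

```shell
#!/bin/sh
# run: print the command when DRY_RUN is set, otherwise execute it
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# upgrade_control_plane VERSION   (e.g. 1.29.3, without the leading "v")
upgrade_control_plane() {
  version="$1"
  # 1. Upgrade only kubeadm, and keep it pinned afterwards
  run sudo apt-mark unhold kubeadm
  run sudo apt-get update
  run sudo apt-get install -y "kubeadm=${version}-*"
  run sudo apt-mark hold kubeadm
  # 2. Let kubeadm check the cluster, then upgrade it
  run sudo kubeadm upgrade plan
  run sudo kubeadm upgrade apply "v${version}"
  # 3. Upgrade kubelet and kubectl, then restart kubelet
  #    (on multi-node clusters, drain the node before this step; omitted here)
  run sudo apt-mark unhold kubelet kubectl
  run sudo apt-get install -y "kubelet=${version}-*" "kubectl=${version}-*"
  run sudo apt-mark hold kubelet kubectl
  run sudo systemctl daemon-reload
  run sudo systemctl restart kubelet
}
```

`DRY_RUN=1 upgrade_control_plane 1.29.3` only prints the eleven commands so you can review them; without `DRY_RUN` they are executed.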

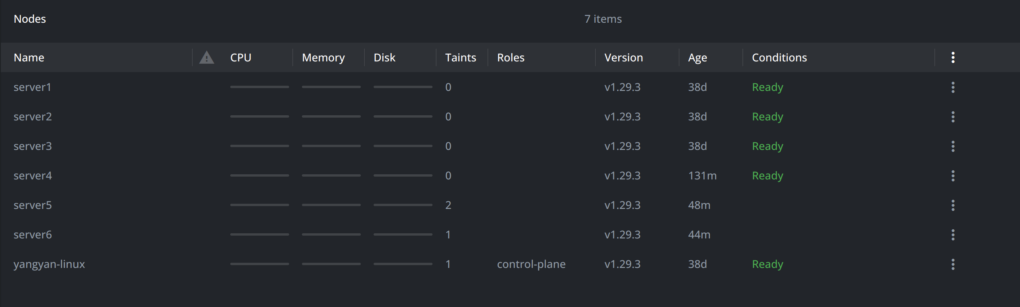

```
sudo kubeadm upgrade apply v1.29.3
```

Unresolved issues after joining an LXD VM as a node

1. kube-proxy and kube-flannel-ds keep restarting over and over, and their logs show no obvious cause. These nodes keep flipping between Ready and NotReady.

Logs from kube-flannel-ds:

```
I0404 04:01:58.464181 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.1.0/24]
I0404 04:01:58.464609 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.2.0/24]
I0404 04:01:58.464814 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.3.0/24]
I0404 04:01:58.465028 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.5.0/24]
I0404 04:01:58.465219 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.7.0/24]
I0404 04:01:58.465386 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.8.0/24]
I0404 04:01:58.465550 1 kube.go:482] Creating the node lease for IPv4. This is the n.Spec.PodCIDRs: [10.244.0.0/24]
I0404 04:01:59.446826 1 kube.go:146] Node controller sync successful
I0404 04:01:59.447181 1 main.go:229] Created subnet manager: Kubernetes Subnet Manager - server5
I0404 04:01:59.447373 1 main.go:232] Installing signal handlers
I0404 04:01:59.448025 1 main.go:540] Found network config - Backend type: vxlan
I0404 04:01:59.448238 1 match.go:210] Determining IP address of default interface
I0404 04:01:59.449293 1 match.go:263] Using interface with name enp5s0 and address 10.163.76.180
I0404 04:01:59.449545 1 match.go:285] Defaulting external address to interface address (10.163.76.180)
I0404 04:01:59.449798 1 vxlan.go:141] VXLAN config: VNI=1 Port=0 GBP=false Learning=false DirectRouting=false
I0404 04:01:59.454117 1 kube.go:621] List of node(server5) annotations: map[string]string{"flannel.alpha.coreos.com/backend-data":"{\"VNI\":1,\"VtepMAC\":\"9a:bd:48:f1:ae:c7\"}", "flannel.alpha.coreos.com/backend-type":"vxlan", "flannel.alpha.coreos.com/kube-subnet-manager":"true", "flannel.alpha.coreos.com/public-ip":"10.163.76.180", "kubeadm.alpha.kubernetes.io/cri-socket":"unix:///var/run/containerd/containerd.sock", "node.alpha.kubernetes.io/ttl":"0", "volumes.kubernetes.io/controller-managed-attach-detach":"true"}
I0404 04:01:59.454467 1 vxlan.go:155] Setup flannel.1 mac address to 9a:bd:48:f1:ae:c7 when flannel restarts
I0404 04:01:59.455500 1 main.go:354] Setting up masking rules
I0404 04:01:59.474232 1 main.go:405] Changing default FORWARD chain policy to ACCEPT
I0404 04:01:59.482440 1 iptables.go:290] generated 7 rules
I0404 04:01:59.495141 1 main.go:433] Wrote subnet file to /run/flannel/subnet.env
I0404 04:01:59.495541 1 main.go:437] Running backend.
I0404 04:01:59.496246 1 iptables.go:290] generated 3 rules
I0404 04:01:59.497498 1 vxlan_network.go:65] watching for new subnet leases
I0404 04:01:59.497775 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40100, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xc0a86465, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x34, 0x61, 0x3a, 0x61, 0x62, 0x3a, 0x65, 0x36, 0x3a, 0x33, 0x65, 0x3a, 0x36, 0x30, 0x3a, 0x64, 0x37, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.498172 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40200, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xc0a86466, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x30, 0x65, 0x3a, 0x34, 0x33, 0x3a, 0x34, 0x64, 0x3a, 0x36, 0x65, 0x3a, 0x37, 0x64, 0x3a, 0x31, 0x66, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.499240 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40300, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xc0a86467, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x62, 0x61, 0x3a, 0x38, 0x33, 0x3a, 0x32, 0x39, 0x3a, 0x34, 0x63, 0x3a, 0x36, 0x32, 0x3a, 0x66, 0x39, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.499872 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40500, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xaa34cb4, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x61, 0x32, 0x3a, 0x33, 0x64, 0x3a, 0x37, 0x32, 0x3a, 0x37, 0x37, 0x3a, 0x61, 0x34, 0x3a, 0x65, 0x62, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.500121 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40800, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xaa34cb4, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x65, 0x32, 0x3a, 0x62, 0x30, 0x3a, 0x38, 0x38, 0x3a, 0x65, 0x30, 0x3a, 0x39, 0x37, 0x3a, 0x37, 0x62, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.501395 1 subnet.go:160] Batch elem [0] is { lease.Event{Type:0, Lease:lease.Lease{EnableIPv4:true, EnableIPv6:false, Subnet:ip.IP4Net{IP:0xaf40000, PrefixLen:0x18}, IPv6Subnet:ip.IP6Net{IP:(*ip.IP6)(nil), PrefixLen:0x0}, Attrs:lease.LeaseAttrs{PublicIP:0xc0a8006e, PublicIPv6:(*ip.IP6)(nil), BackendType:"vxlan", BackendData:json.RawMessage{0x7b, 0x22, 0x56, 0x4e, 0x49, 0x22, 0x3a, 0x31, 0x2c, 0x22, 0x56, 0x74, 0x65, 0x70, 0x4d, 0x41, 0x43, 0x22, 0x3a, 0x22, 0x34, 0x61, 0x3a, 0x38, 0x39, 0x3a, 0x65, 0x37, 0x3a, 0x36, 0x61, 0x3a, 0x36, 0x62, 0x3a, 0x62, 0x61, 0x22, 0x7d}, BackendV6Data:json.RawMessage(nil)}, Expiration:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Asof:0}} }
I0404 04:01:59.511950 1 main.go:458] Waiting for all goroutines to exit
I0404 04:01:59.531712 1 iptables.go:283] bootstrap done
I0404 04:01:59.545530 1 iptables.go:283] bootstrap done
W0404 04:02:44.506878 1 reflector.go:347] pkg/subnet/kube/kube.go:462: watch of *v1.Node ended with: an error on the server ("unable to decode an event from the watch stream: http2: client connection lost") has prevented the request from succeeding
I0404 04:03:01.055790 1 main.go:524] shutdownHandler sent cancel signal...
W0404 04:03:01.055917 1 reflector.go:424] pkg/subnet/kube/kube.go:462: failed to list *v1.Node: Get "https://10.96.0.1:443/api/v1/nodes?resourceVersion=3706720": context canceled
I0404 04:03:01.055951 1 trace.go:205] Trace[685446889]: "Reflector ListAndWatch" name:pkg/subnet/kube/kube.go:462 (04-Apr-2024 04:02:45.552) (total time: 15503ms):
Trace[685446889]: ---"Objects listed" error:Get "https://10.96.0.1:443/api/v1/nodes?resourceVersion=3706720": context canceled 15503ms (04:03:01.055)
Trace[685446889]: [15.503206904s] [15.503206904s] END
E0404 04:03:01.055967 1 reflector.go:140] pkg/subnet/kube/kube.go:462: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://10.96.0.1:443/api/v1/nodes?resourceVersion=3706720": context canceled
E0404 04:03:01.055984 1 subnet.go:145] could not watch leases: context canceled
I0404 04:03:01.055992 1 vxlan_network.go:79] evts chan closed
I0404 04:03:01.056007 1 main.go:461] Exiting cleanly...
```

Logs from kube-proxy:

```
E0404 04:04:24.566556 1 server.go:1039] "Failed to retrieve node info" err="Get \"https://192.168.0.110:6443/api/v1/nodes/server4\": dial tcp 192.168.0.110:6443: i/o timeout"
I0404 04:04:25.752692 1 server.go:1050] "Successfully retrieved node IP(s)" IPs=["10.163.76.180"]
I0404 04:04:25.755449 1 conntrack.go:58] "Setting nf_conntrack_max" nfConntrackMax=131072
I0404 04:04:25.793806 1 server.go:652] "kube-proxy running in dual-stack mode" primary ipFamily="IPv4"
I0404 04:04:25.794169 1 server_others.go:168] "Using iptables Proxier"
I0404 04:04:25.798810 1 server_others.go:512] "Detect-local-mode set to ClusterCIDR, but no cluster CIDR for family" ipFamily="IPv6"
I0404 04:04:25.799114 1 server_others.go:529] "Defaulting to no-op detect-local"
I0404 04:04:25.799375 1 proxier.go:245] "Setting route_localnet=1 to allow node-ports on localhost; to change this either disable iptables.localhostNodePorts (--iptables-localhost-nodeports) or set nodePortAddresses (--nodeport-addresses) to filter loopback addresses"
I0404 04:04:25.799898 1 server.go:865] "Version info" version="v1.29.3"
I0404 04:04:25.800222 1 server.go:867] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""
I0404 04:04:25.805200 1 config.go:188] "Starting service config controller"
I0404 04:04:25.805432 1 shared_informer.go:311] Waiting for caches to sync for service config
I0404 04:04:25.805815 1 config.go:97] "Starting endpoint slice config controller"
I0404 04:04:25.806010 1 shared_informer.go:311] Waiting for caches to sync for endpoint slice config
I0404 04:04:25.807020 1 config.go:315] "Starting node config controller"
I0404 04:04:25.807268 1 shared_informer.go:311] Waiting for caches to sync for node config
I0404 04:04:25.905911 1 shared_informer.go:318] Caches are synced for service config
I0404 04:04:25.906336 1 shared_informer.go:318] Caches are synced for endpoint slice config
I0404 04:04:25.907703 1 shared_informer.go:318] Caches are synced for node config
```

The other nodes, which run in KVM, do not have this problem; it only affects the nodes that are VMs created in LXD. If you know what the cause is, please let me know by email: 277160299@qq.com